We have all seen the demo: the sales rep uploads a messy 50-page MSA, clicks a button, and voilà, the AI highlights every risky clause in red and suggests perfect fallback language.

It looks like magic. What the vendor doesn't mention is that the demo model has often been tuned for months on that exact document type. When you upload your company's unique, messy, historically inconsistent contracts on Day 1, the AI will likely choke.

Pre-Trained vs. Custom Models

Most CLM vendors offer "Pre-Trained Models" for standard concepts like "Governing Law" or "Termination for Convenience." These work fine for generic agreements.

But your company doesn't care about generic risks. You care about specific risks:

- "Does this Indemnification clause cover 'Gross Negligence'?"

- "Is the Liability Cap tied to 'Fees Paid in the last 12 months' or 'Total Contract Value'?"

To answer these questions, the AI needs to be taught your playbook. And who teaches the AI? You do.

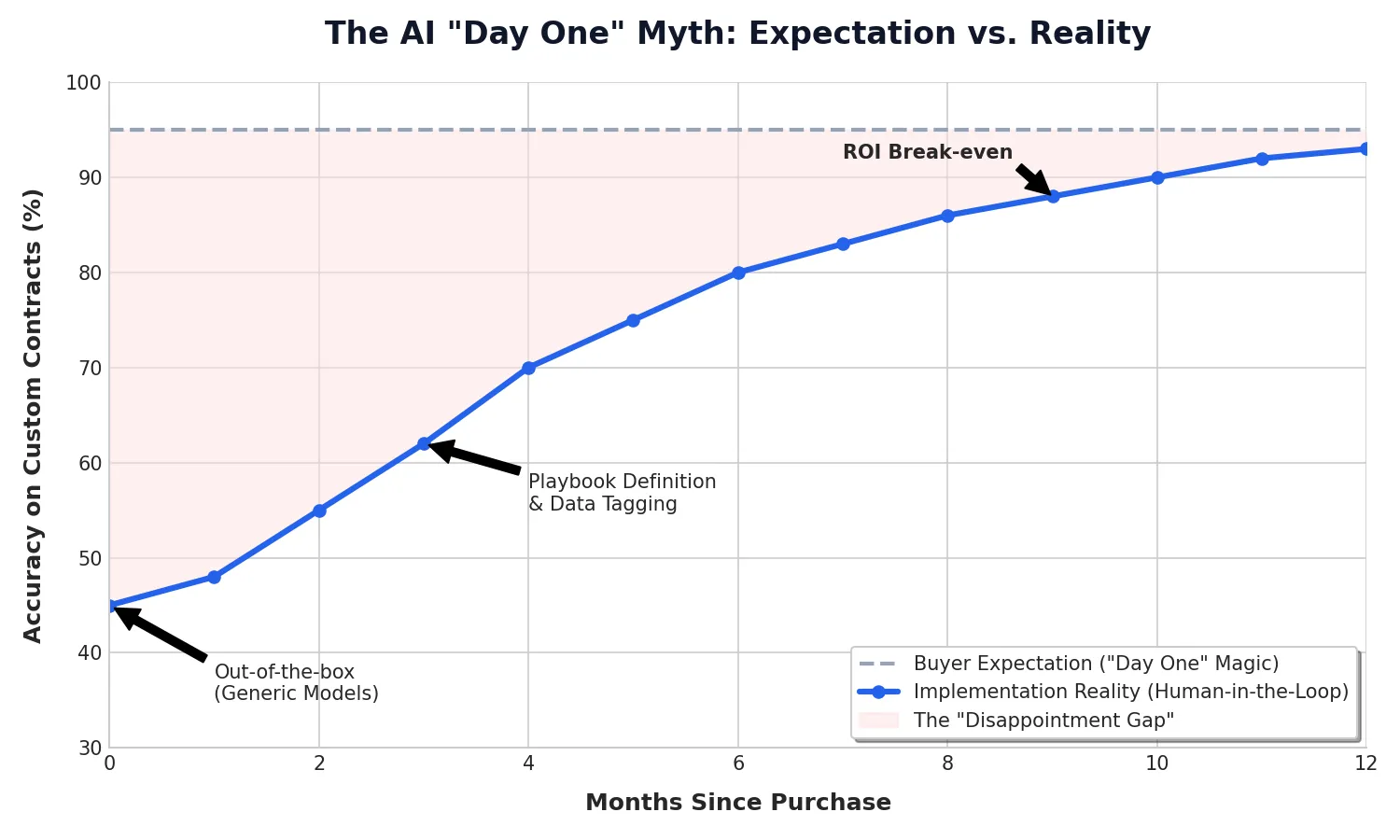

The Disappointment Gap

The painful reality of training an AI model from "dumb" to "useful."

The Hidden Cost: "Human-in-the-Loop"

The most expensive part of AI isn't the software license; it's the senior-attorney time required to validate the results.

You cannot assign a junior paralegal to train the AI, because they might not know whether the AI's suggestion is legally sound. You need your best lawyers to spend hours clicking "Correct" or "Incorrect" on AI predictions. This "Human-in-the-Loop" requirement is the cost that wrecks most ROI calculations.

The "Hallucination" Risk

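Generative AI (like GPT-4) is great at writing fluent text, but it can be a confident liar. When a model states something false with full confidence, the industry calls it a "hallucination," and in legal work the consequences are unusually expensive.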

If the AI drafts a clause that sounds legal but references a non-existent regulation, and your tired lawyer approves it at 5 PM on a Friday, you have just introduced a massive liability into your contract.

The Consultant's Takeaway: Buy AI for what it can do in Year 2, not on Day 1. And budget for 100+ hours of legal engineering time to get it there. If you aren't willing to do the homework, don't buy the tool.